Computational tool detects clinically relevant mutations from tumor images

In Brief

- A deep learning computer program detected the presence of molecular and genetic alterations based only on tumor images across multiple cancer types, including head and neck cancer.

- The approach might make cancer diagnosis faster and less expensive and help clinicians deliver earlier personalized treatment to patients.

From apps that vocalize driving directions to virtual assistants that play songs on command, artificial intelligence, or AI (a computer’s ability to simulate human intelligence and behavior), is becoming part of our everyday lives. Medicine also stands to benefit from AI. In recent years, researchers have been exploring the use of such tools to help clinicians diagnose and treat diseases, including cancer.

In a study supported in part by NIDCR, an international research team showed that a type of artificial intelligence called deep learning successfully detected the presence of molecular and genetic alterations based only on tumor images across 14 cancer types, including those of the head and neck. The findings, published in the August issue of Nature Cancer, raise the possibility that deep learning could be adapted by clinicians to more rapidly and cheaply deliver personalized cancer care.

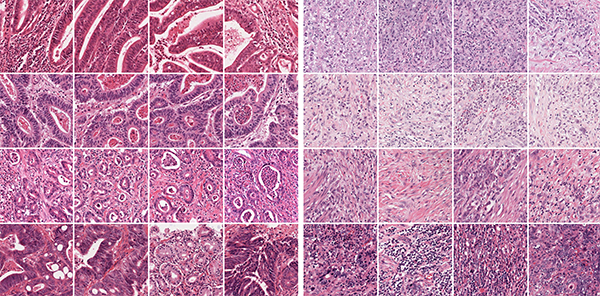

Traditionally, many cancers are diagnosed by surgically removing a tissue sample from the area in question and examining thin slices on a slide under a microscope. Using this method, pathologists can recognize cancer based on the size, shape, and structure of the tissue and cells. Recent advances in molecular and genetic testing allow clinicians to tailor treatment to the unique profile of a patient’s tumor. However, these advanced tests can be costly and take days or even weeks to process, limiting their availability to many patients.

In the current study, the scientists set out to overcome these hurdles by harnessing the computational power of deep learning. “We asked if it’s possible to molecularly subtype a patient’s cancer based only on slide images of tumors,” explains Alexander Pearson, MD, PhD, an assistant professor of medicine at the University of Chicago. Pearson is co-lead of the study, along with gastrointestinal oncology researchers Tom Luedde, MD, PhD, and Jakob Nikolas Kather, MD, MSc, of Aachen University in Germany. Pearson’s work was funded by an NIDCR K08 award, designed to support research training for individuals with clinical doctoral degrees.

The team’s rationale is based on evidence that cancerous genetic alterations cause changes in tumor cell behavior, which in turn affects cell shape, size, and structure. If so, the scientists hypothesized, these features might be apparent in slide images and detectable by a computer.

Pearson and Kather, who have expertise in quantitative science, set to work developing a computer algorithm capable of detecting such changes using publicly available tumor images and corresponding genetic and molecular information.

Once the researchers were satisfied with the program’s predictive powers, they tested whether it could detect molecular alterations directly from tissue images of more than 5,000 patients across 14 cancer types, including those of the head and neck. These anonymized patient images and data came from The Cancer Genome Atlas (TCGA) database, a National Cancer Institute portal containing molecular characterizations of 20,000 patient samples spanning 33 cancer types.

“We had the algorithm focus exclusively on alterations that are clinically actionable, meaning there’s scientific evidence to support their use to inform patient care,” says Pearson. For many of the alterations used in the study, drugs targeting them are already FDA-approved or currently being tested in clinical trials.

The deep learning program successfully predicted a range of genetic and molecular changes across all 14 cancer types tested. For example, the algorithm detected with high accuracy a mutated form of the TP53 gene, thought to be a main driver of head and neck cancer. It also accurately predicted the presence of standard molecular markers such as hormone receptors in breast cancer. Hormone receptor status is an important factor in guiding treatment options for patients with breast cancer.

“We demonstrated the feasibility of using deep learning to infer genetic and molecular alterations, including driver mutations responsible for carcinogenesis, from routine tissue slide images,” Pearson says. According to the authors, the deep learning program could be optimized to run on mobile devices, which might one day make it easy for clinicians to adopt.

Pearson stresses, however, that the program isn’t quite ready for clinical use. He and his colleagues are working to improve its accuracy, in part by re-training it on a larger number of patient samples and validating it against non-TCGA datasets.

Nevertheless, “the findings open up a path toward more rapid and less costly cancer diagnoses,” says Pearson. “It’s our hope that computational tools like ours could help clinicians develop earlier and more widely accessible personalized treatment plans for patients.”

References

Pan-cancer image-based detection of clinically actionable genetic alterations. Kather JN, Heij LR, Grabsch HI, Loeffler C, Echle A, Muti HS, Krause J, Niehues JM, Sommer KAJ, Bankhead P, Kooreman LFS, Schulte JJ, Cipriani NA, Buelow RD, Boor P, Ortiz-Bruchle N, Hanby AM, Speirs V, Kochanny S, Patnaik A, Srisuwananukorn A, Brenner H, Hoffmeister M, van den Brandt PA, Jager D, Trautwein C, Pearson AT, Luedde T. Nature Cancer. July 27, 2020.

Attention Editors

Reprint this article in your own publication or post to your website. NIDCR News articles are not copyrighted. Please acknowledge NIH's National Institute of Dental and Craniofacial Research as the source.